Introduction

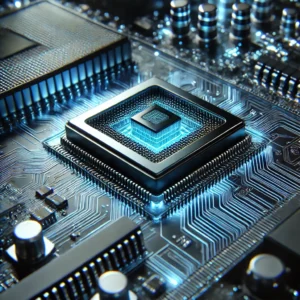

Chip and hardware technology is changing quickly. Advances in artificial intelligence (AI) accelerators, quantum computing, and semiconductor engineering are raising computational efficiency and reshaping industries from healthcare to autonomous systems. This article surveys recent developments in these areas and the challenges that come with them.

The Evolution of Semiconductor Chips

Moore’s Law and Its Impact

Moore’s Law originated with Gordon Moore’s 1965 observation that the number of transistors on a chip was doubling roughly every year; in 1975 he revised the pace to approximately every two years. That steady doubling drove exponential growth in computing power for decades. Although transistor scaling now runs into physical limits, recent innovations, including 3D stacking, extreme ultraviolet (EUV) lithography, and nanosheet transistors, have kept density improvements going.
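To make the doubling arithmetic concrete, here is a minimal sketch of the projection that a two-year doubling period implies. The function name and the starting count of one billion transistors are hypothetical, chosen only for illustration; real chips do not follow the curve exactly.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count, assuming one doubling per period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1 billion transistors.
for years in (2, 10, 20):
    count = projected_transistors(1e9, years)
    print(f"after {years:2d} years: {count:.3g} transistors")
```

Under this assumption, ten doublings over twenty years multiply the count by 1,024, which is why even a small slowdown in the doubling period has a large cumulative effect.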

External Resource:

Intel’s Moore’s Law Explanation

AI and Machine Learning Chips

The rise of AI has driven demand for specialized chips such as graphics processing units (GPUs), tensor processing units (TPUs), and neuromorphic processors. Unlike general-purpose CPUs, these chips are designed for the highly parallel arithmetic at the heart of machine learning, which improves both throughput and energy efficiency.

Example Applications:

- NVIDIA’s AI GPUs are widely used to train and run deep learning models.

- Google’s TPUs power AI applications like Google Search and Google Translate.


Quantum Computing: The Next Frontier

How Quantum Chips Work

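Classical computers use binary bits, each of which is either 0 or 1. Quantum computers use qubits, which can exist in a superposition of both states until they are measured. Combined with entanglement and interference, this lets quantum algorithms solve certain problems, such as factoring large integers, far faster than the best known classical methods. The advantage is specific to those problems; quantum computers are not faster at every task.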
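Superposition can be illustrated with a small classical simulation of a single qubit. The sketch below is not how quantum hardware is programmed; it simply represents a qubit as a pair of amplitudes and applies a Hadamard gate, a standard operation that turns the 0 state into an equal superposition. All function names are hypothetical.

```python
import math
import random


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp0, amp1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


def measure(state, rng=random):
    """Simulate one measurement, which yields a definite 0 or 1."""
    p0, _ = measurement_probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1.0, 0.0)          # qubit prepared in the 0 state
plus = hadamard(zero)      # equal superposition of 0 and 1
p0, p1 = measurement_probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # prints "P(0) = 0.50, P(1) = 0.50"
print("ten measurements:", [measure(plus) for _ in range(10)])
```

Each measurement still returns a single bit. The potential speedup comes from how quantum algorithms manipulate amplitudes before measurement, not from reading out many answers at once.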

Key Players in Quantum Computing:

- IBM Quantum – Developing commercial quantum computers.

- Google’s Sycamore Processor – Used in Google’s 2019 experiment, which the company reported as achieving quantum supremacy on a specific sampling task.

External Resource:

IBM Quantum Computing Research

Emerging Trends in Hardware Technology

Edge Computing and IoT Processors

Edge computing reduces latency by processing data close to where it is generated, which cuts down on how much must be sent to the cloud. AI-capable IoT chips bring this on-device processing to smart home devices, industrial automation, and autonomous vehicles.

Key Benefits:

✅ Reduced latency in smart applications

✅ Lower power consumption

✅ Enhanced security with decentralized processing

Neuromorphic Computing

Neuromorphic chips model their architecture on the brain’s networks of spiking neurons, aiming to handle certain workloads, such as sparse, event-driven sensing, with far less power than conventional chips. Intel (Loihi) and IBM (TrueNorth) have built research chips based on this approach.

Challenges in Chip Development

Supply Chain Constraints

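The global semiconductor industry has faced significant supply chain disruptions in recent years, most visibly the chip shortage that began in 2020, which slowed production of cars, consumer electronics, and other goods. In response, governments and manufacturers are investing in domestic chip fabrication to reduce reliance on a small number of overseas suppliers.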

Sustainability Concerns

Modern chip manufacturing requires substantial energy and water resources. Researchers are exploring eco-friendly fabrication methods and alternative semiconductor materials, such as graphene and gallium nitride.

Conclusion

The future of chip and hardware technologies is being shaped by AI, quantum computing, and advanced semiconductor research. As these technologies evolve, they will revolutionize industries and drive unprecedented computational capabilities. Businesses and researchers must stay ahead by adopting cutting-edge hardware solutions and leveraging AI-driven innovations.
